Evolution of Wireless Communication Technology in Hearing Aids

Navid Taghvaei, AuD and Sonie Harris, AuD

The Features and Benefits of Signia E2E Innovations

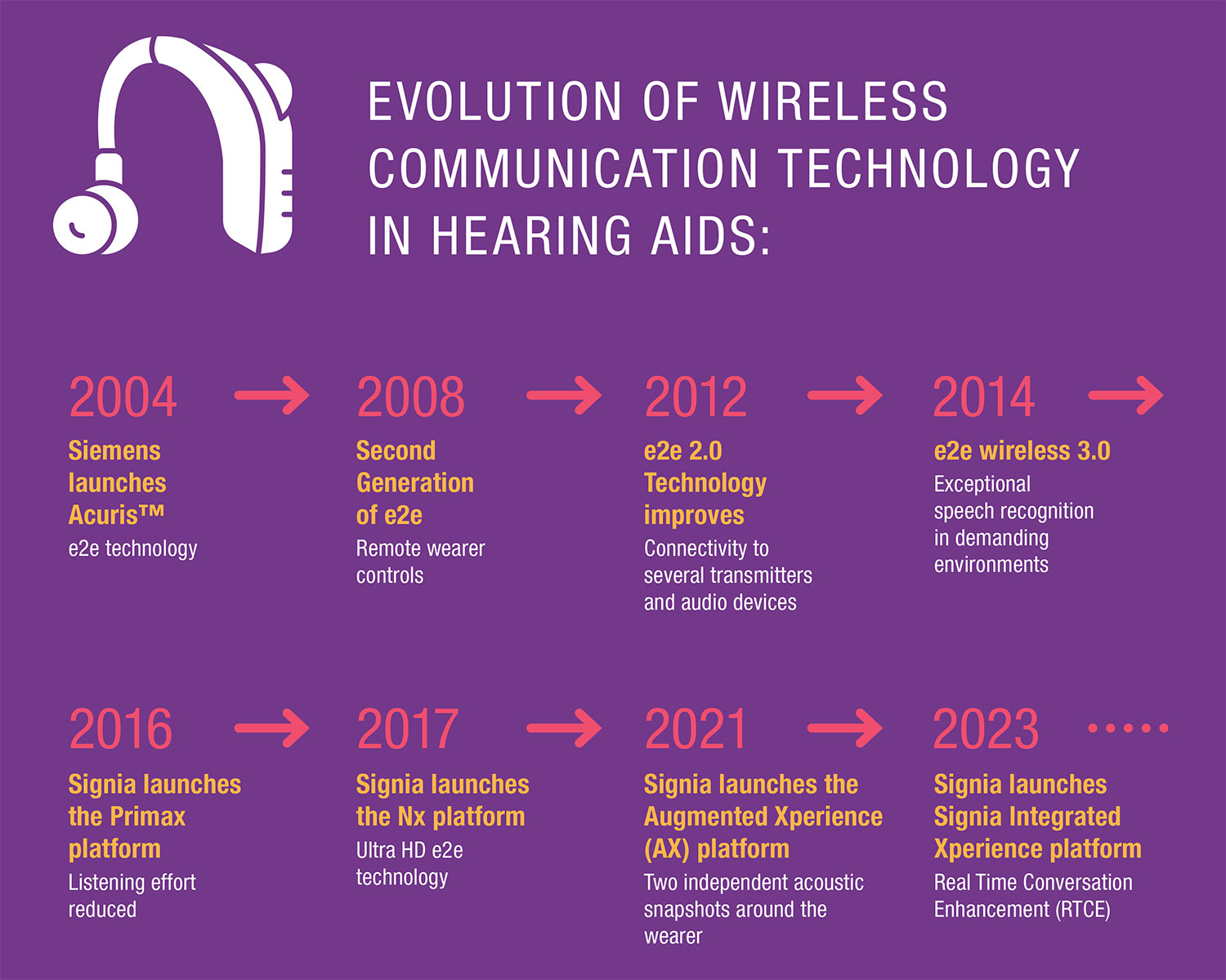

Wireless communication between hearing aids is an excellent example of how hearing aids in the digital era, which began in the late 1990s, have evolved over time. As this tutorial demonstrates, these incremental improvements have brought added wearer benefit. Although the focus here is on Signia technology, the wireless technology offered by other hearing aid manufacturers has evolved in a similar way, contributing to an increase in overall wearer satisfaction from about 60% in 1998 to upwards of 85% in 2022, as measured by MarkeTrak surveys.

Hearing aid wearers have not always enjoyed the benefits of wireless technology. In October 2004, nearly 20 years ago, Siemens launched Acuris™, the first product with an embedded wireless technology called e2e (ear-to-ear). The first of its kind, e2e technology opened new pathways for future product developments. For the uninitiated, e2e wireless communication sends and receives signals between the hearing instruments during normal operation, so that they continually share information about the current listening environment and control settings. The technology can also trigger additional information and data transmission, both through user controls and between the hearing instruments simultaneously.

This e2e wireless technology was the first of its kind to efficiently use electromagnetic transmission to send coded digital information alternating between two frequencies, 114 kHz and 120 kHz. e2e uses a form of modulation called Frequency Shift Keying (FSK), and with a typical current consumption of 120 μA, the technology is extremely power efficient. Because it operates on this narrow frequency band, it assures wireless functionality with virtually no interference. Even though FSK modulation is also used in Bluetooth-enabled applications, this form of electromagnetic transmission, also called Near Field Magnetic Induction (NFMI), is not a Bluetooth-enabled application. NFMI is well suited for wireless communication over short distances, which makes it ideal for ear-to-ear transmission between two devices worn on the head.
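To make the idea of alternating between two carrier frequencies concrete, the following is a minimal simulation sketch of binary FSK using the 114 kHz and 120 kHz carriers named above. Everything else is an assumption made for illustration: the sample rate, the symbol length, the mapping of bit 0 and bit 1 to the two carriers, and the correlation-based detector are not taken from the e2e design.

```python
import math

F_ZERO_HZ = 114_000.0         # carrier used here for bit 0 (frequency from the text)
F_ONE_HZ = 120_000.0          # carrier used here for bit 1 (frequency from the text)
SAMPLE_RATE_HZ = 1_200_000.0  # assumed simulation sample rate
SAMPLES_PER_BIT = 200         # assumed symbol length, illustrative only


def fsk_modulate(bits):
    """Return a phase-continuous FSK waveform for a sequence of 0/1 bits."""
    samples = []
    phase = 0.0
    for bit in bits:
        freq = F_ONE_HZ if bit else F_ZERO_HZ
        step = 2.0 * math.pi * freq / SAMPLE_RATE_HZ
        for _ in range(SAMPLES_PER_BIT):
            samples.append(math.sin(phase))
            phase += step
    return samples


def tone_energy(block, freq):
    """Energy of `block` at `freq`, found by correlating with cosine and sine."""
    i_sum = 0.0
    q_sum = 0.0
    for n, x in enumerate(block):
        angle = 2.0 * math.pi * freq * n / SAMPLE_RATE_HZ
        i_sum += x * math.cos(angle)
        q_sum += x * math.sin(angle)
    return i_sum * i_sum + q_sum * q_sum


def fsk_demodulate(samples):
    """Decide each bit by comparing the energy at the two carrier frequencies."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        block = samples[start:start + SAMPLES_PER_BIT]
        is_one = tone_energy(block, F_ONE_HZ) > tone_energy(block, F_ZERO_HZ)
        bits.append(1 if is_one else 0)
    return bits


if __name__ == "__main__":
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    print(fsk_demodulate(fsk_modulate(message)) == message)
```

With the assumed symbol length, each bit spans a whole number of cycles of both carriers, which keeps the two tones easy to tell apart in this toy detector. A real NFMI link would also need to handle noise, synchronization, and coding, none of which is modeled here.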

From the start, e2e wireless synchronized the digital processing of the two hearing instruments, including the activation of noise reduction algorithms, and linked the instruments so that they reached the same signal processing characteristics at precisely the same time. It made it possible to control both left and right hearing instruments with one control for volume and one for program selection. The two hearing instruments were designed to work together as a single unified system to simplify the fitting and wearing of two hearing instruments. There are many advantages to using this wireless technology: maintaining binaural signal processing, excellent sound quality without signal drop, optimizing the accuracy of signal classification, expanding options on smaller custom instruments, and overall ease of use.

It is well established that there are many benefits to binaural hearing, including improved signal-to-noise ratio (SNR), better auditory localization, loudness summation, reduction of head shadow effect, and improved sound quality (Mueller & Hall, 1998). Hearing instrument wearers who use two independent hearing instruments gain a bilateral advantage; however, they do not necessarily enjoy the benefit of binaural hearing (Noble & Byrne, 1991). e2e wireless communication ensures that both instruments analyze, interpret, and react together as a single system, thus empowering hearing instrument wearers to take full advantage of binaural hearing.

AUDIOLOGY PRACTICES, VOL. 15, NO. 4

The main benefit of binaural hearing is improvement in localization, using interaural time and intensity cues. In independent bilateral hearing aid fittings, the volume settings of the two hearing instruments can easily become mismatched, affecting these necessary auditory cues (Hornsby & Ricketts, 2004). Another benefit of consistent gain matching relates to the binaural squelch effect, sometimes referred to as the binaural intelligibility level difference or the binaural masking level difference (Zurek, 1993). The binaural squelch effect provides an improvement in the SNR. In binaural fittings, this improvement can provide an advantage of 2.5 to 3.0 dB for listening in background noise over a unilateral fitting, which demonstrates that individuals with hearing loss also experience binaural squelch benefits (Ricketts, 2000). As e2e wireless technology helps maintain loudness symmetry, it should also help maximize the wearer’s binaural squelch and redundancy (Jerger et al., 1984).

In addition to preserving interaural time and intensity cues, wireless e2e processing synchronizes directional microphones, which has a significant effect on speech intelligibility. The most important finding from research in this area is that matched directional microphone modes in both hearing instruments significantly increase speech understanding in noise, by up to 4.5 dB, when compared to mismatched microphone settings (Hornsby & Ricketts, 2005; Mackenzie & Lutman, 2005). Wireless e2e technology not only provides significant improvements in “ease of use,” but also improves the wearer’s overall listening comfort and speech understanding by synchronizing decision-making and signal processing.

Wireless Connectivity

In 2008, e2e wireless 2.0 was introduced in tandem with remote wearer controls. This 2nd generation of e2e technology enabled audio signals from external devices to be streamed wirelessly to the hearing instruments in stereo, with no audible delay. For the first time, hearing aid wearers could watch TV, listen to music, and hold telephone conversations wirelessly, essentially turning their hearing instruments into personal headsets. This brought significant audiological advantages: because the microphone could be placed close to the sound source and its signal transmitted directly to hearing aids a considerable distance away, the wireless connection significantly improved the SNR of the listening environment. This wearer benefit is especially relevant for telephone communication. In a bilateral fitting, the phone signal is transmitted to both hearing instruments, allowing the wearer to take advantage of binaural redundancy and central integration, which can improve the signal-to-noise ratio by 2 to 3 dB (Dillon, 2001).

By 2012, e2e wireless 2.0 technology improved further by offering connectivity to several transmitters and audio devices. These wearer benefits of e2e technology corresponded with the rising popularity of smartphones. Hearing aid wearers could take advantage of multiple applications on their favorite gadgets simultaneously. This meant that they could have video calls on their laptop then transition seamlessly back to their smartphones. Wearers could now take turn-by-turn instructions from their favorite navigation app or listen to their favorite songs from their smartphones simultaneously. It was now possible to continue to watch TV and listen at their preferred volume without disturbing others via streaming from their favorite tablets or streaming platforms.

One of the most impressive innovations in hearing instruments at the time was automatic learning, or “trainable hearing instruments.” Signia hearing instruments (Siemens at the time) could learn automatically through the SoundLearning algorithm: every time the patient adjusted loudness or frequency response, both hearing instruments recorded the specific listening situation. This record was based on the acoustic sensor system, the sound pressure level of the input, and the patient’s desired gain and frequency response, captured in synchrony. In a matter of weeks, the learning instruments could map out the wearer’s preferences and then automatically adjust to the preferred setting when that listening situation was detected again.
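The learning loop described above can be illustrated with a small sketch. It is not the SoundLearning algorithm itself, whose details are not given here; it simply assumes that each adjustment is stored against a listening situation (a hypothetical sound class plus a 10 dB input-level band) and that the preferred setting is the running average of past adjustments. The class name, method names, and parameters are all invented for illustration.

```python
class PreferenceLearner:
    """Toy learner: average of the wearer's gain offsets per listening situation."""

    def __init__(self, level_step_db=10):
        self.level_step_db = level_step_db  # assumed width of each input-level band
        self._sum = {}
        self._count = {}

    def _key(self, sound_class, input_level_db):
        # A "situation" is a sound class plus an input-level band.
        return (sound_class, int(input_level_db // self.level_step_db))

    def record_adjustment(self, sound_class, input_level_db, gain_offset_db):
        """Store one manual adjustment made by the wearer."""
        key = self._key(sound_class, input_level_db)
        self._sum[key] = self._sum.get(key, 0.0) + gain_offset_db
        self._count[key] = self._count.get(key, 0) + 1

    def preferred_offset(self, sound_class, input_level_db):
        """Return the learned offset, or 0.0 dB for a situation never seen."""
        key = self._key(sound_class, input_level_db)
        count = self._count.get(key, 0)
        if count == 0:
            return 0.0
        return self._sum[key] / count


def record_on_both(left, right, sound_class, input_level_db, gain_offset_db):
    """Mirror an adjustment to both instruments so they learn in synchrony."""
    for learner in (left, right):
        learner.record_adjustment(sound_class, input_level_db, gain_offset_db)


if __name__ == "__main__":
    left, right = PreferenceLearner(), PreferenceLearner()
    record_on_both(left, right, "speech_in_noise", 72, -2.0)
    record_on_both(left, right, "speech_in_noise", 74, -4.0)
    print(left.preferred_offset("speech_in_noise", 75))
```

A simple average is only one possible design; a real system might weight recent adjustments more heavily or learn frequency-specific preferences.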

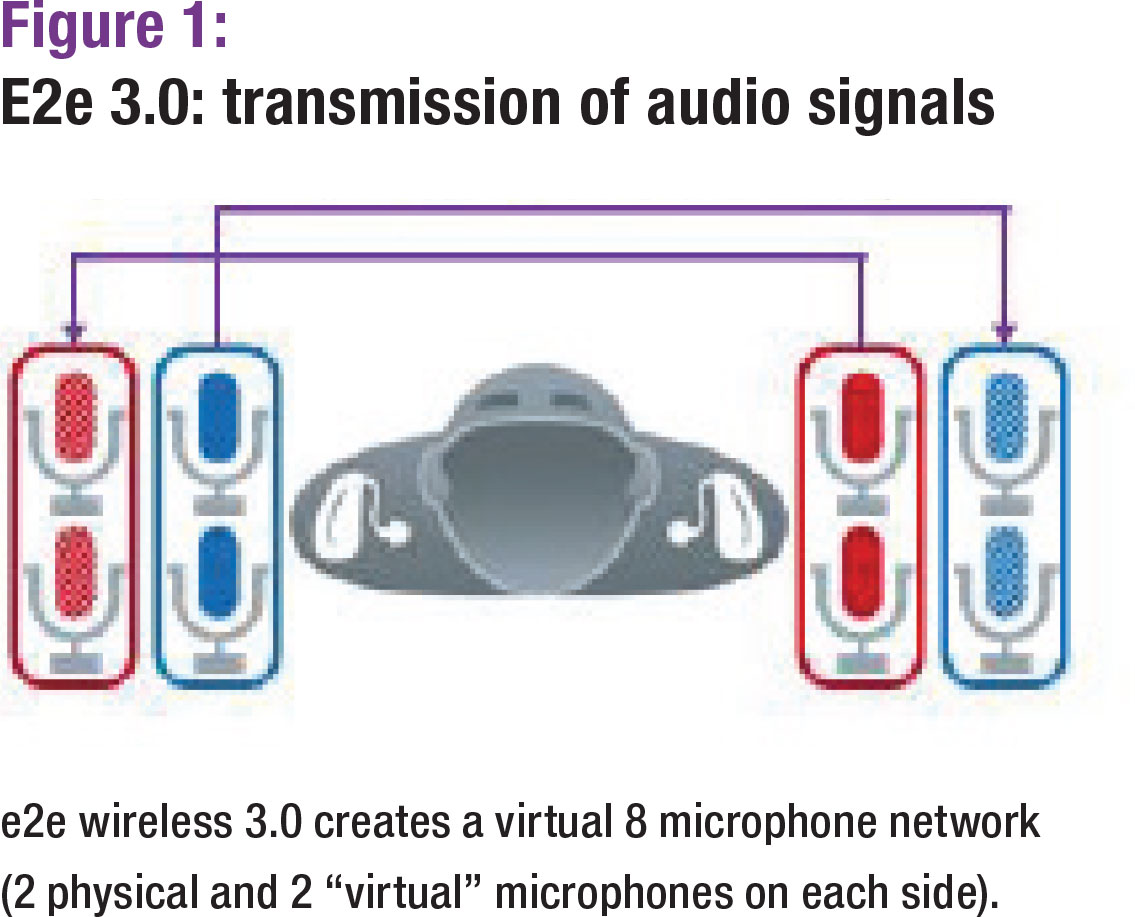

In 2014, e2e wireless 3.0 enabled a series of new algorithms that empowered hearing aid wearers to achieve better speech recognition in demanding listening environments than people with normal hearing (Kamkar-Parsi et al., 2014). This 3rd generation NFMI system could transmit dual-microphone audio data bidirectionally from ear to ear, creating a virtual 8-microphone network (Figure 1). To achieve this sophisticated communication, the effective interaural data rate of e2e 3.0 was raised by a factor of 1000 compared to e2e 2.0. e2e 3.0 achieved this without drawbacks in size or battery drain by using a design dedicated to hearing instruments. This included the choice of frequency band, the design of the analog and digital transmission system, and the integration of the system into the hearing instrument.

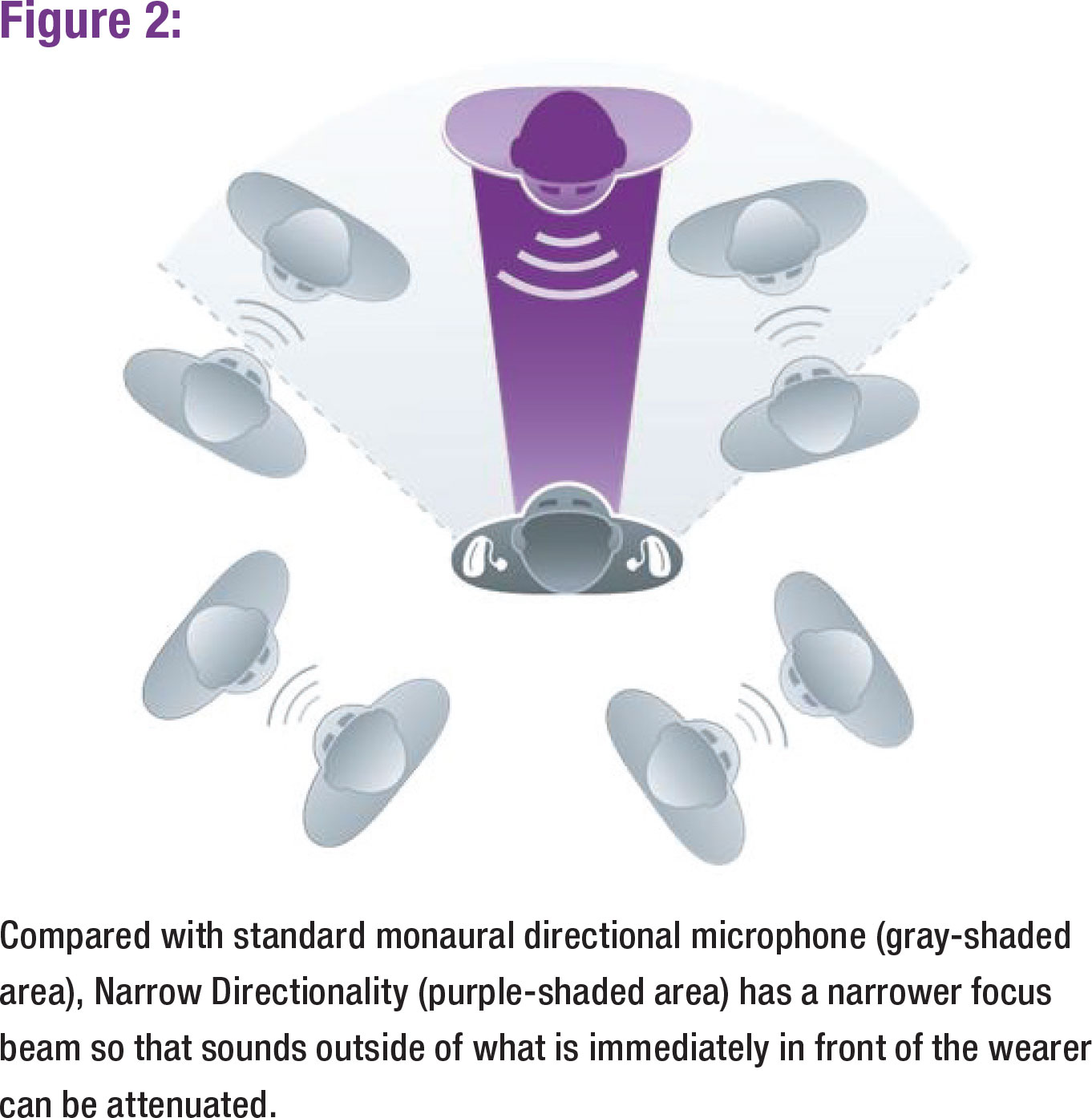

Through the wireless transmission and sharing of audio information between the two hearing instruments with e2e wireless 3.0, it is possible to make calculations involving the wearer’s auditory scene that were not previously possible. In particular, the hearing instrument output can be focused and enhanced for signals of interest (most commonly target speech) while the output from other spatial orientations, where the input is not desirable, is minimized. In Signia devices, this was accomplished through two different algorithms referred to as Narrow Directionality and Spatial SpeechFocus. With Narrow Directionality, the directional polar pattern has a very narrow focus to the front (i.e., the look direction of the hearing instrument wearer). The output of the hearing instruments is significantly reduced for all inputs falling outside of the narrow focus (Figure 2), even if they are located right next to the target speech source. This provided an SNR advantage not observed in previous directional instruments. The wearer achieved this SNR advantage simply by looking at the talker of interest, as illustrated in Figure 2.

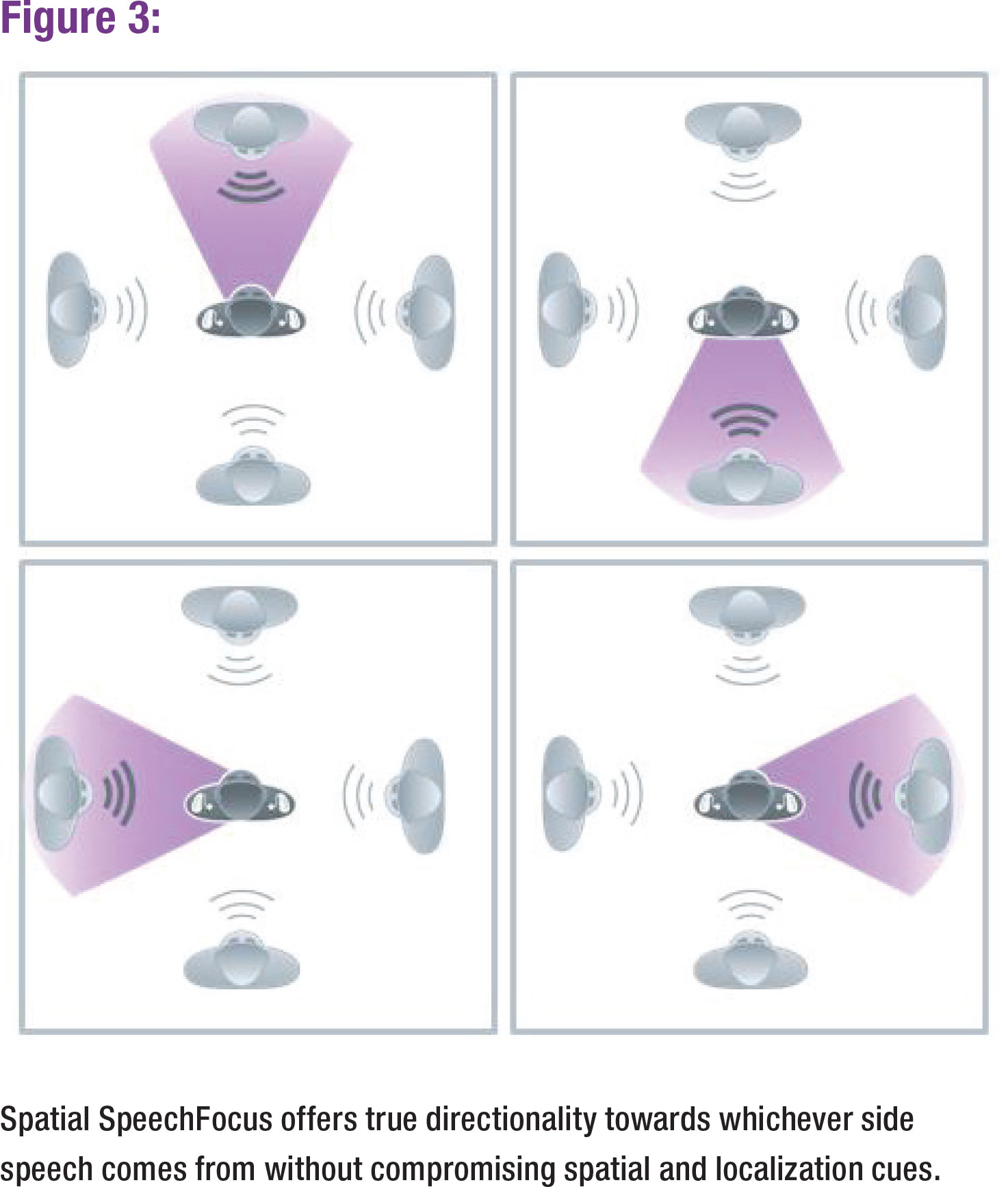

In contrast, the Spatial SpeechFocus algorithm was designed for the opposite speech-in-noise listening situation, in which the listener cannot look directly at the target speech signal. This algorithm detects the location of speech (front, back, or either side) and adjusts the focus of the directional polar pattern to enhance this signal, which provides less output for signals from other spatial orientations (Figure 3). While it is understood that we may not restore optimal binaural processing for older individuals with hearing loss, there are methods to compensate for their diminished capabilities. The algorithms of the binax hearing instruments, using binaural beamforming, were able to provide this compensation very effectively, to the extent that the hearing aid wearer could perform even better than their normal-hearing counterparts in some speech-in-noise listening conditions (Powers & Beilin, 2013).

In early 2016, Signia launched the Primax platform. With this new platform and the power of wireless binaural data exchange, clinical studies from three leading hearing aid research centers showed a consistent trend: Primax features significantly reduced listening effort. The number of participants, speech material used, the SNRs applied to establish baseline, and the Primax features examined varied somewhat from site to site, but all sites used the same hearing instruments, objective EEG analysis, and subjective self-assessment judgments of listening effort. Research from one site revealed that speech recognition performance for hearing-impaired listeners (pure-tone average 35-60 dB) using the Primax Narrow Directionality algorithm was equal to that of normal-hearing individuals for the same speech-in-noise task. Overall, the cumulative findings affirmed the desired Primax design goal of optimizing speech understanding while simultaneously reducing listening effort (Littmann et al., 2017).

With the introduction of the Nx platform in 2017, Signia was able to harness “Ultra HD e2e” technology to address the “own voice issue” experienced by many hearing aid wearers. As all audiologists know, sound quality is vital for new hearing aid wearers who must adapt to hearing the world via a prosthetic device. Of course, one critical aspect of sound quality is the wearer’s own voice. Audiologists often resolve own voice complaints by reducing gain, with the unintended consequence of decreasing speech intelligibility. With the Signia Nx, the “own voice problem” was minimized by a novel technology called Own Voice Processing (OVP™). Traditionally, the audiogram is the basis for the hearing aid fitting. With OVP, the wearer’s voice is also scanned by the hearing aid’s microphone system, which adds a short additional step to the fitting (Figure 4). During the acoustic scan, the wearer simply talks for a few seconds, and a three-dimensional acoustic model of the wearer’s head and vocal pattern is created using machine learning principles. This model is used to detect whether sound originates from the wearer’s mouth or from an external source; a dedicated setting optimized for naturalness and comfort is then applied when the wearer is talking. By separating the processing of the wearer’s voice from external sounds, it is possible to achieve comfort and audibility simultaneously. The benefits of OVP have been consistently proven in several independent clinical studies, including improved own voice quality when the wearer is talking; uncompromised audibility for external sounds; enhanced localization in soft, average, and loud environments without reducing the clinically proven performance of Narrow Directionality; and an extended dynamic range that preserves the integrity of the amplified signal, even in very loud environments (Froehlich et al., 2018).

Split Processing

One of the most common complaints of hearing aid wearers is difficulty understanding speech in background noise (Picou, 2020). When determining how to best provide amplification to hearing aid wearers, each manufacturer designs algorithms for frequency shaping and “gain-for-speech” in the wearer’s soundscape. These algorithms offer the wearer some combination of directionality, noise reduction and compression – all in an effort to optimize speech intelligibility and listening comfort, while minimizing background noise.

Each manufacturer tackles these challenges in slightly different ways by using different combinations of directional microphone arrays, processed-based (spectral) noise reduction and compression. Some manufacturers use an algorithm designed to preserve speech by using broad focus (more omni-directional) directionality. This approach is often promoted for its natural sound quality; however, it relies on the wearer’s top-down auditory processing ability to extract speech in difficult listening situations. In contrast, other manufacturers use an algorithm designed to enhance speech by using narrow focus directionality to provide a better SNR, in an effort to increase the gain of speech relative to extraneous noise and reverberation. This approach gives the wearer access to speech and ease of listening in noisy situations; however, the overall sound quality can be negatively impacted by the heavy use of directionality and noise reduction. Each approach presents a compromise: leveraging the wearer’s ability to use top-down auditory processing is limited in unfavorable SNR conditions or if the wearer is prone to listening-related fatigue or cognitive decline. On the other hand, using narrow directionality to manage the SNR can detrimentally affect sound quality as well as the ability to localize.

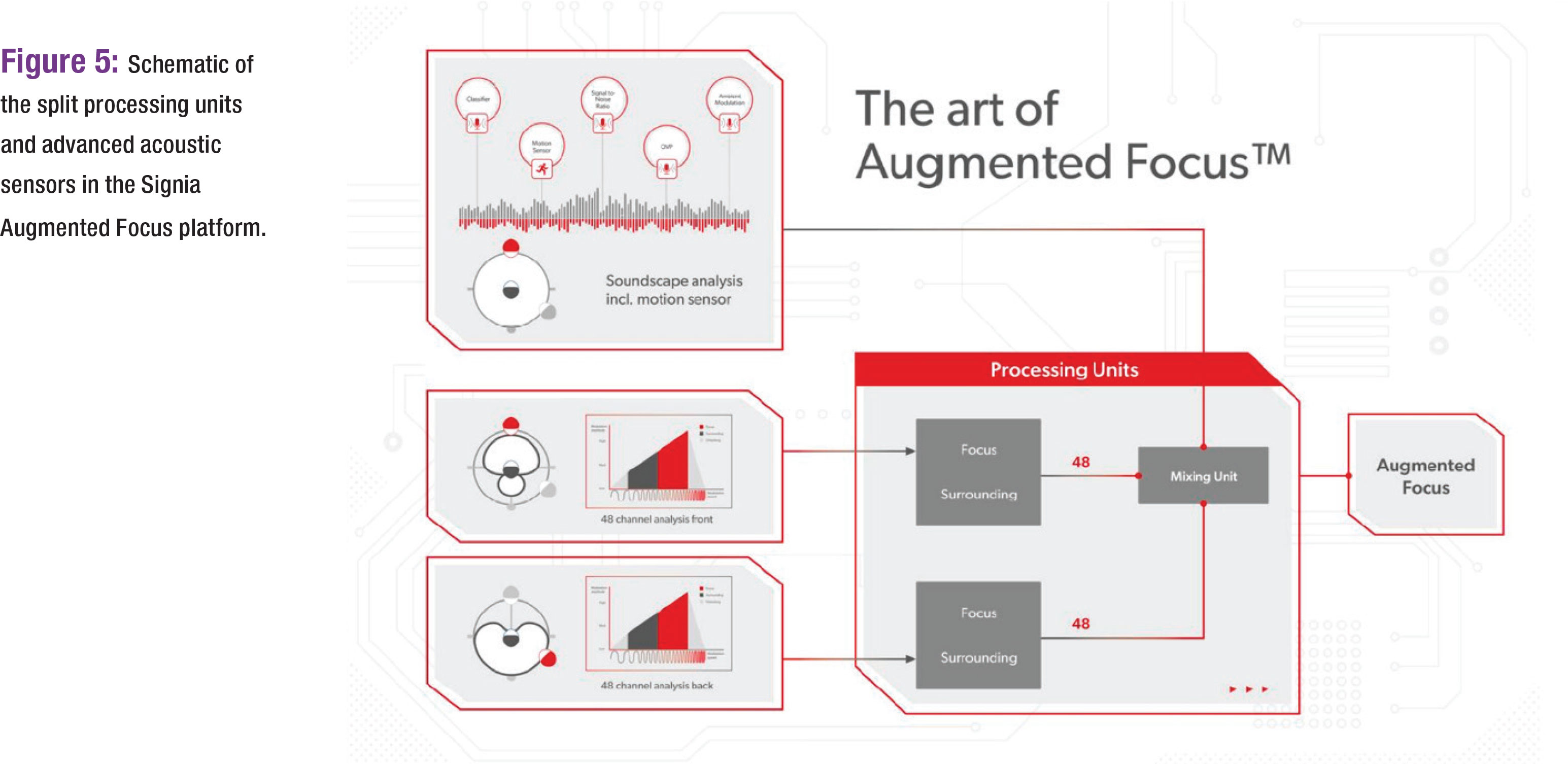

In 2021, using the latter approach described above, Signia launched the Augmented Xperience (AX) platform which leverages the unique aspects of bilateral beamforming technology to create two independent acoustic snapshots around the wearer. Each acoustic snapshot (one for the frontal hemisphere and one for the rear hemisphere) independently analyzes the wearer’s soundscape and applies gain, noise reduction, and compression to the input signals in each of the two snapshots. The front focus processor highlights speech using a more linear compression strategy, effectively providing more contrast for speech coming from the front of the wearer. The rear surround processor uses curvilinear compression to preserve the natural growth of loudness for surrounding sounds to help the wearer feel more aware of their surroundings. The information from both acoustic snapshots is then combined and the signal undergoes a soundscape analysis which employs sensors for SNR, motion, noise floor and own voice detection. This processing strategy is known as split processing and has been branded by Signia as Augmented Focus.
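The two-stream idea can be sketched in a few lines. This is a toy model under stated assumptions, not Signia's implementation: levels are handled as single broadband dB values, the front stream uses a fixed low compression ratio as a stand-in for "more linear" compression, the rear stream uses an input/output curve whose slope falls smoothly with level as a stand-in for "curvilinear" compression, and the streams are recombined by adding their powers. All function names, gains, knee points, and ratios are hypothetical.

```python
import math


def front_output_db(input_db, gain_db=20.0, knee_db=50.0, ratio=1.3):
    """Front stream: near-linear input/output curve (low, fixed ratio)."""
    if input_db <= knee_db:
        return input_db + gain_db
    return knee_db + gain_db + (input_db - knee_db) / ratio


def rear_output_db(input_db, gain_db=20.0, knee_db=50.0, bend_db=40.0):
    """Rear stream: curved input/output function whose slope falls smoothly."""
    if input_db <= knee_db:
        return input_db + gain_db
    over = input_db - knee_db
    return knee_db + gain_db + over / (1.0 + over / bend_db)


def mix_db(level_a_db, level_b_db):
    """Combine two stream levels by adding their powers."""
    return 10.0 * math.log10(10.0 ** (level_a_db / 10.0) + 10.0 ** (level_b_db / 10.0))


def split_process(front_in_db, rear_in_db):
    """Process each hemisphere with its own curve, then recombine."""
    return mix_db(front_output_db(front_in_db), rear_output_db(rear_in_db))


if __name__ == "__main__":
    for level in (40, 60, 80):
        print(level, round(front_output_db(level), 1), round(rear_output_db(level), 1))
    print(round(split_process(65, 75), 1))
```

In this sketch both curves apply the same gain below the knee and diverge above it, so louder sounds from the front keep more of their level contrast than equally loud sounds from the rear. The soundscape analysis stage (SNR, motion, noise floor, and own voice sensors) is not modeled.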

Enhancements Made Possible by Split Processing

In addition to providing improved speech understanding in adverse SNRs, the use of split processing also enables the implementation of certain advanced features.

Streaming Sound Quality

Devices that use split processing can offer a dedicated signal path for streamed signals, effectively a third dedicated processor, which tailors gain and compression for streamed music. This dedicated processing path can further be customized based on the source of the input signal (audio, television streamer or Made for iPhone [MFi] hands-free devices) to optimize the frequency shaping and microphone mix for different listening scenarios.

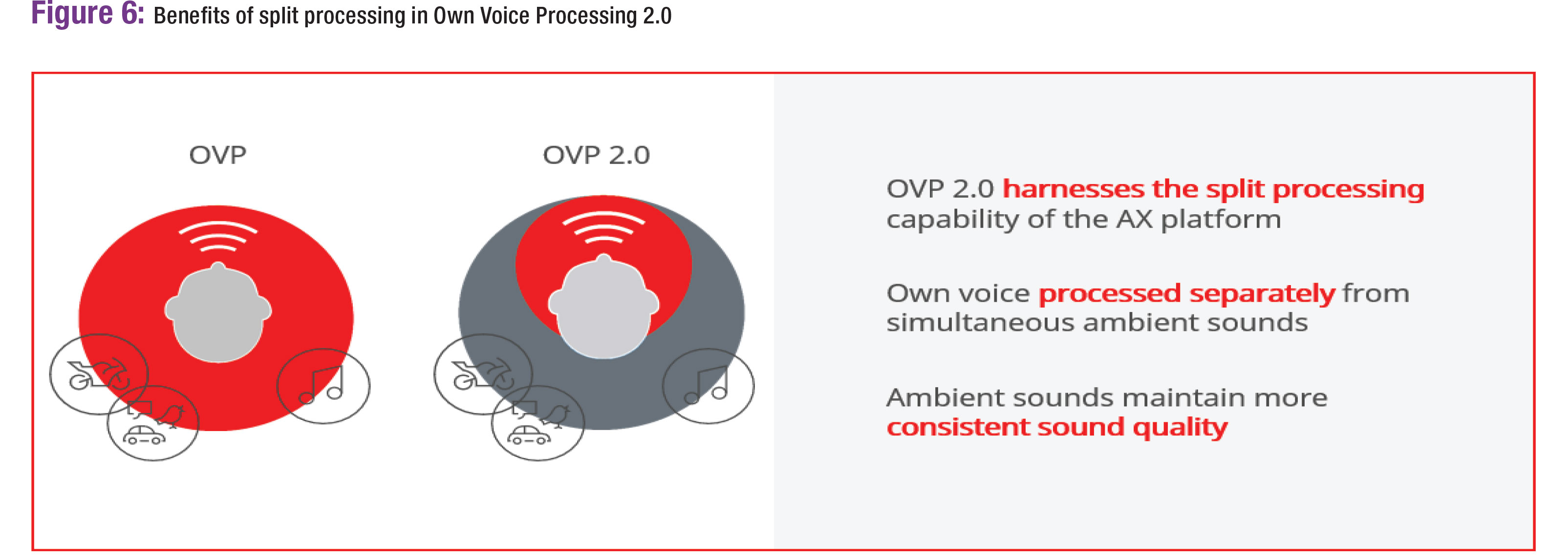

Own Voice Processing 2.0

Hearing aid wearers experience their own voice through two routes: the occlusion effect, from energy building up in a closed ear canal, and amplification of the wearer’s voice by the hearing aids, which is delivered into the ear canal. The result is an own voice sound quality that is sometimes too loud and uncomfortable. To adjust the fitting for these sound quality complaints, a provider could choose to increase the vent size or decrease gain; however, both options compromise audibility for the wearer. This issue affects both new and experienced hearing aid wearers, with 56% of experienced wearers with an open fitting shown to be dissatisfied with their own voice perception (Hengen et al., 2020).

Signia introduced Own Voice Processing with the Nx platform in 2017, which allowed the provider to calibrate the hearing devices for the wearer’s own voice. Once this calibration is completed during the fitting process, the devices can detect the wearer’s own voice and apply gain and compression settings to keep the wearer’s own voice comfortable. When the wearer stops talking, the compression applied to the wearer’s own voice releases rapidly and the devices return to the programmed gain.
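The detect-then-release behavior described above can be illustrated with a simple gain tracker. This is only a sketch: the per-frame own voice flag is taken as given (the detector itself is not modeled), and the gain values and smoothing coefficients are hypothetical numbers chosen to show a fast switch to an own voice setting and a rapid return to the programmed gain.

```python
def own_voice_gain_track(own_voice_flags,
                         programmed_gain_db=25.0,
                         own_voice_gain_db=15.0,
                         attack=0.8,
                         release=0.6):
    """Return the gain applied in each frame, given per-frame own voice flags.

    `attack` and `release` are the fraction of the remaining distance to the
    target gain that is covered in one frame (assumed values).
    """
    gain_db = programmed_gain_db
    track = []
    for is_own_voice in own_voice_flags:
        target = own_voice_gain_db if is_own_voice else programmed_gain_db
        coeff = attack if is_own_voice else release
        gain_db += coeff * (target - gain_db)
        track.append(gain_db)
    return track


if __name__ == "__main__":
    flags = [False, True, True, True, False, False, False, False]
    for flag, gain in zip(flags, own_voice_gain_track(flags)):
        print(flag, round(gain, 2))
```

In this toy model the gain settles near the own voice setting within a few frames of the wearer starting to talk and climbs back toward the programmed gain within a few frames of the wearer stopping.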

Own Voice Processing 2.0 was further improved using split processing in the Augmented Xperience platform, with the wearer’s own voice processed in the front focus processor and ambient sounds processed by the surround processor. This results in consistent sound quality for environmental sounds while providing a more natural-sounding own voice quality. Own Voice Processing is supported by extensive research showing improvement in own voice satisfaction and a preference for OVP (Høydal, 2017; Froehlich et al., 2018; Jensen et al., 2021). The benefits of Own Voice Processing extend beyond subjective improvements in sound quality. Research has also shown that 78% of participants reported increased communication with Own Voice Processing activated (Powers et al., 2018). This increase in social interaction can have a positive effect on neurological function and mental wellbeing (Friedler et al., 2015). To further encourage increased social interaction, hearing aid wearers can access “My Conversations” within the Signia smartphone app, which looks at how often and how long Own Voice Processing is activated and rates the wearer’s conversation frequency on a scale of Low, Medium, High, or Very High. This provides a tangible end-user benefit to encourage hearing aid use and social interaction.

RealTime Conversation Enhancement with Multi-Stream Architecture

Picou (2022) reports that “conversations with large groups” and “following conversations in the presence of noise” continue to show low percentages of satisfied hearing aid owners. Both situations are difficult because dynamic conversation with multiple conversation partners cannot be isolated to a specific directional polar plot or acoustic snapshot. As people change positions or the hearing aid wearer moves their head, speech becomes harder to follow. Add in any significant background noise and the hearing aid wearer may become frustrated and withdraw from the conversation altogether.

Nicoras et al. (2022) identified several factors related to conversational success, such as “being able to listen easily”, “being engaged and accepted”, and “perceiving flowing and balanced interaction”. These findings indicate that conversation is a dynamic, free-flowing and multifaceted activity. Hearing aid wearers want to be able to hear and contribute actively to group conversations, even when they cannot turn to look at the person who is speaking.

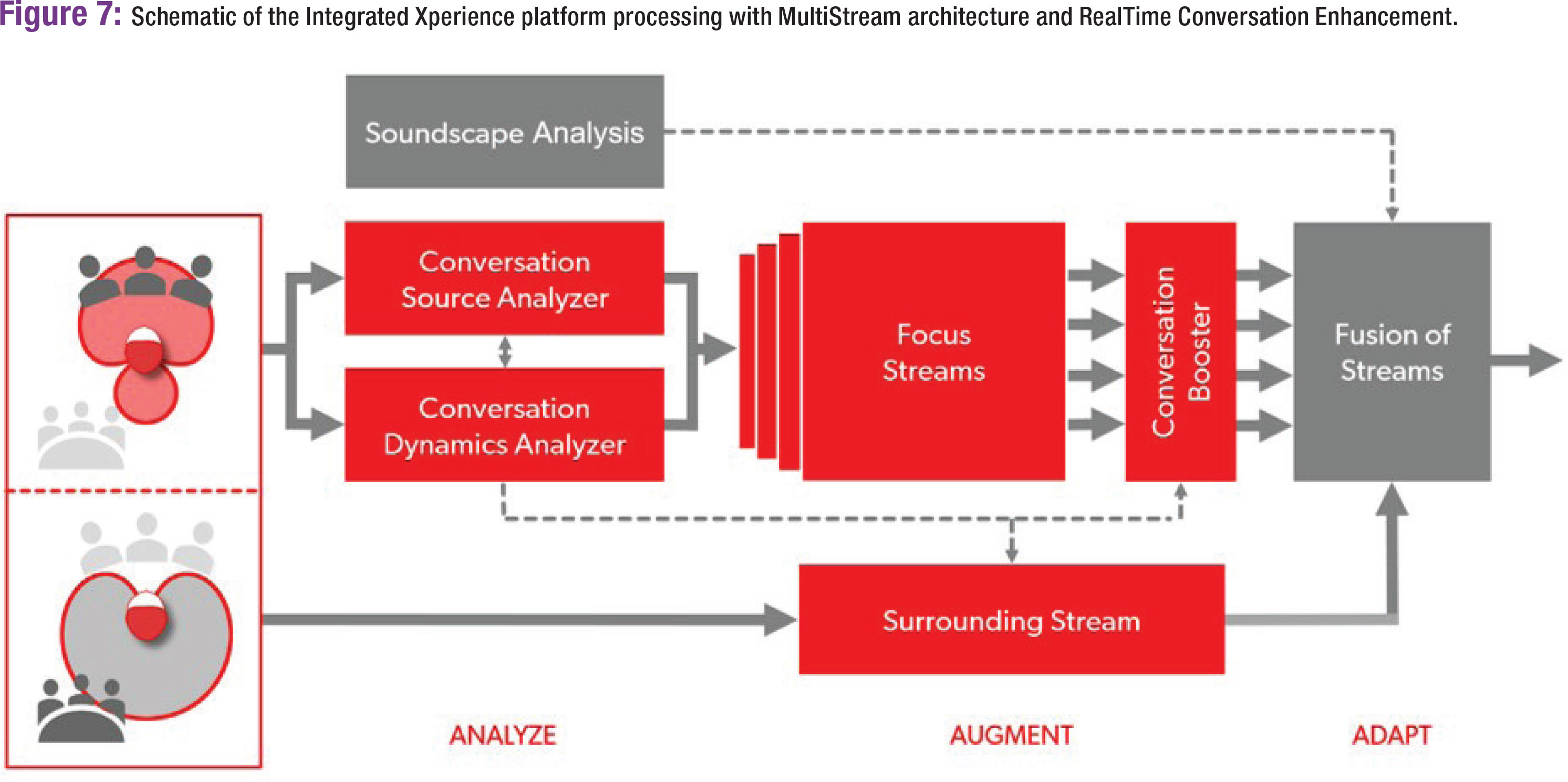

Hearing aids are now being designed to leverage these unique aspects of group conversations. One example of this is the Signia Integrated Xperience (IX) platform, which was launched in 2023. The IX platform uses an advanced analysis of the wearer’s conversational layout, called RealTime Conversation Enhancement (RTCE). This analysis is used to pinpoint and enhance multiple speakers in real time using a three-stage process that attempts to identify speech and then increase its gain once it has been identified.

Instead of dividing the wearer’s soundscape into a front and rear hemisphere, where each is processed independently, IX can divide the frontal hemisphere into three acoustic snapshots in addition to one rear snapshot. Speech is identified in each of the four acoustic snapshots, and gain is increased wherever speech is present. This processing strategy represents a novel application of minimum-variance distortionless response (MVDR) microphone arrays, a technology that all manufacturers use but that each implements differently. Signia is one of three manufacturers who implement it as part of a second-order array, bilateral beamforming system.
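A minimum-variance distortionless beamformer of the kind named above can be sketched for the simplest possible case: two microphones, one target direction, and one interfering noise source. The textbook weight formula is w = R⁻¹d / (dᴴR⁻¹d), where R is the noise covariance matrix and d is the steering vector toward the target. The scenario below (a single frequency, a target arriving in phase at both microphones, an interferer with an assumed 2.0 rad interaural phase difference, and the chosen noise powers) is invented for illustration and says nothing about how any manufacturer implements its array.

```python
import cmath


def invert_2x2(m):
    """Inverse of a 2x2 complex matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def mat_vec(m, v):
    """Product of a 2x2 matrix and a length-2 vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


def inner(u, v):
    """Hermitian inner product u^H v."""
    return sum(x.conjugate() * y for x, y in zip(u, v))


def noise_covariance(interferer, interferer_power, sensor_noise_power):
    """Covariance of one directional interferer plus uncorrelated sensor noise."""
    return [[interferer_power * interferer[i] * interferer[j].conjugate()
             + (sensor_noise_power if i == j else 0.0)
             for j in range(2)]
            for i in range(2)]


def mvdr_weights(noise_cov, steering):
    """MVDR weights: w = R^-1 d / (d^H R^-1 d)."""
    r_inv_d = mat_vec(invert_2x2(noise_cov), steering)
    denom = inner(steering, r_inv_d)
    return [x / denom for x in r_inv_d]


if __name__ == "__main__":
    target = [1 + 0j, 1 + 0j]                  # assumed: target straight ahead
    interferer = [1 + 0j, cmath.exp(-2.0j)]    # assumed: 2.0 rad phase difference
    cov = noise_covariance(interferer, 1.0, 0.01)
    w = mvdr_weights(cov, target)
    print("target response:", abs(inner(w, target)))
    print("interferer response:", abs(inner(w, interferer)))
```

The "distortionless" part is the constraint that the response toward the target stays at exactly one, while the "minimum variance" part is that, subject to this constraint, the output noise power is as small as possible. In this toy case that places a deep notch on the interferer, far deeper than simply averaging the two microphones would.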

Both benchtop and behavioral studies have been conducted with Signia Integrated Xperience with RealTime Conversation Enhancement to measure the patient benefit of this new processing strategy.

Jensen et al. (2023b) compared the conversational signal-to-noise ratio benefits of Signia IX with RTCE to those of four premium receiver-in-canal (RIC) competitor devices using a KEMAR manikin and a simulated conversation scenario with two conversation partners in background noise. In this study, Signia IX with RTCE showed an overall output SNR of 11.8 dB, which was a 4.1 dB improvement over the next closest competitor device. It was also noted that there were benefits for speech coming from the front and the side of the wearer. This SNR improvement for speakers outside of the traditional directional polar plot allows wearers to participate in conversations without always facing the conversation partner.
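Because decibel figures can be hard to interpret, a short worked conversion may help. The sketch below uses only the two numbers reported above (11.8 dB and 4.1 dB) and assumes that the 4.1 dB improvement is a simple difference between the two devices' output SNRs; the variable names are illustrative.

```python
def db_to_power_ratio(db):
    """Convert a level difference in dB to a ratio of powers."""
    return 10.0 ** (db / 10.0)


REPORTED_SNR_DB = 11.8        # output SNR reported for Signia IX with RTCE
REPORTED_ADVANTAGE_DB = 4.1   # reported advantage over the next closest device

# Implied output SNR of the next closest device, assuming a simple difference.
next_closest_snr_db = REPORTED_SNR_DB - REPORTED_ADVANTAGE_DB

# The same advantage expressed as a ratio of speech-to-noise power ratios.
advantage_as_power_ratio = db_to_power_ratio(REPORTED_ADVANTAGE_DB)

if __name__ == "__main__":
    print(round(next_closest_snr_db, 1))
    print(round(advantage_as_power_ratio, 2))
```

Under that assumption, the next closest device would have had an output SNR of about 7.7 dB, and a 4.1 dB advantage corresponds to a speech-to-noise power ratio roughly 2.6 times larger.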

Jensen et al. (2023a) also conducted a behavioral study that supported the benefits of Signia IX with RTCE. Speech-in-noise testing was completed using two versions of the German Matrix test: the standard Oldenburger Satztest (OLSA; Wagener et al., 1999) and a modified OLSA, which was designed to simulate a realistic group conversation, with multiple speakers in front of the wearer and multiple noise sources behind the wearer. With RealTime Conversation Enhancement turned on, 95% of participants performed better in the modified OLSA test, suggesting that this feature can provide a clear benefit to wearers in group conversations.

As hearing aid technologies evolve, it is important to improve the wearer’s ability to participate in dynamic, spontaneous group conversations that keep them connected to the people who matter most. Advancements in wireless binaural technologies have been clinically proven to provide significant benefits to hearing aid wearers. The fact that wearer satisfaction has improved by more than 25 percentage points over a 20-year span suggests that all hearing aid manufacturers have effectively implemented innovations like near-field magnetic induction wireless technology. ■

References

- Dillon, H. (2001). Hearing Aids. 1st ed. New York: Thieme.

- Friedler, B., Crapser, J., & McCullough, L. (2015). One is the deadliest number: the detrimental effects of social isolation on cerebrovascular diseases and cognition. Acta neuropathologica, 129(4), 493–509. https://doi.org/10.1007/s00401-014-1377-9

- Froehlich M., Powers T.A., Branda E. & Weber J. (2018). Perception of Own Voice Wearing Hearing Aids: Why “Natural” is the New Normal. AudiologyOnline, Article 22822. Retrieved from www.audiologyonline.com.

- Hengen, J., Hammarström, I. L., & Stenfelt, S. (2020). Perception of One’s Own Voice After Hearing-Aid Fitting for Naive Hearing-Aid Users and Hearing-Aid Refitting for Experienced Hearing-Aid Users. Trends in hearing, 24, 2331216520932467. https://doi.org/10.1177/2331216520932467

- Hornsby, B., Ricketts, T.A. (2004). Hearing aid user volume control adjustments: Bilateral consistency and effects on speech understanding. Unpublished manuscript.

- Hornsby, B., Ricketts, T.A. (2005). Consistency and impact of user volume control adjustments. Paper presented at the annual convention of the American Academy of Audiology, Washington D.C.

- Høydal, E.H. (2017). A New Own Voice Processing System for Optimizing Communication. Hearing Review, 24(11), 20-22.

- Jensen, N.S., Samra, B., Parsi, H.K., Bilert, S., Taylor, B. (2023a). Power the conversation with Signia Integrated Xperience and RealTime Conversation Enhancement. Signia White Paper.

- Jensen, N.S., Pischel, C., Taylor, B. (2022). Upgrading the performance of Signia AX with Auto EchoShield and Own Voice Processing 2.0. Signia White Paper.

- Jensen, N.S., Wilson, C., Parsi, H., Taylor, B. (2023b). Improving the signal-to-noise ratio in group conversations with Signia Integrated Xperience and RealTime Conversation Enhancement. Signia White Paper.

- Jensen, N., Powers, L., Haag, S., Lantin, P., Høydal, E. (2021). Enhancing real-world listening and quality of life with new split-processing technology. AudiologyOnline, Article 27929. Retrieved from http://www.audiologyonline.com

- Jerger, J., Brown, D., Smith, S. (1984) Effect of peripheral hearing loss on the MLD. Arch Otolaryngol. 110, 290-296.

- Kamkar-Parsi, H., Fischer, E., Aubreville, M. (2014) New binaural strategies for enhanced hearing. Hearing Review. 21(10), 42-45.

- Littmann, V., Wu, Y.H., Froehlich, M., Powers, T.A. (2017). Multi-center evidence of reduced listening effort using new hearing aid technology. Hearing Review. 24(2), 32-34.

- Mackenzie, E., Lutman, M. E. (2005). Speech recognition and comfort using hearing instruments with adaptive directional characteristics in asymmetric listening conditions. Ear Hear. 26(6), 669–79.

- Mueller, H.G., Hall, J.W. (1998). Audiologists’ Desk Reference, Vol. II. San Diego: Singular Publishing.

- Nicoras R., Gotowiec S., Hadley L.V., Smeds K. & Naylor G. (2022). Conversation success in one-to-one and group conversation: a group concept mapping study of adults with normal and impaired hearing. International Journal of Audiology, 1-9.

- Noble, W., Byrne, D. (1991) Auditory localization under conditions of unilateral fitting of different hearing aid systems. Brit J Audiol. 25(4), 237-250.

- Picou, E.M. (2020). MarkeTrak 10 (MT10) Survey Results Demonstrate High Satisfaction with and Benefits from Hearing Aids. Seminars in Hearing, 41(1), 21-36.

- Picou E.M. (2022). Hearing aid benefit and satisfaction results from the MarkeTrak 2022 survey: Importance of features and hearing care professionals. Seminars in Hearing, 43(4), 301-316.

- Powers T., Beilin J. (2013). True Advances in Hearing Instrument Technology: What Are They and Where’s the Proof? Hearing Review, 20 (1).

- Powers TA, Davis B, Apel D, Amlani AM. (2018). Own voice processing has people talking more. Hearing Review. 25 (7), 42-45.

- Ricketts, T.A. (2000). The impact of head angle on monaural and binaural performance with directional and omnidirectional hearing aids. Ear Hear, 21(4), 318-328.

Navid Taghvaei, Au.D. and Sonie Harris, Au.D. are both Senior Education Specialists for Signia.